Otolaryngologists at NewYork-Presbyterian/Weill Cornell Medicine are exploring ways to use machine learning to improve screening and monitoring of voice pathologies and swallowing dysfunction.

Voice changes and difficulty swallowing can be symptoms of head and neck cancer or can develop as a side effect of radiation treatment. They may also indicate a range of other disorders. “Cough sounds can be potentially used as screening tools for particular respiratory diseases, and you could also use speech for detection of neurological conditions, especially for dementia and movement disorders such as Parkinson’s disease,” explains Anaïs Rameau, MD, MSc, MPhil, Chief of Dysphagia at the Sean Parker Institute for the Voice within the Department of Otolaryngology at NewYork-Presbyterian/Weill Cornell Medicine.

Machine learning, a type of artificial intelligence (AI), is being developed on multiple fronts to improve disease detection, symptom and treatment monitoring, treatment planning, and even rehabilitation, says Dr. Rameau, who recently co-authored a review on the current applications of machine learning in head and neck cancer, published in Current Opinion in Otolaryngology & Head and Neck Surgery.

“Voice is a source of important digital data that has been underexplored because until today we just didn’t have the capacity to analyze this complex signal,” she says. “Now voice and speech data can contribute to an era where we can have precision medicine at the level of the patient with other types of digital data, such as that captured by wearables.”

Linking Biomarkers to Laryngological Conditions

Dr. Rameau and her colleagues are participating in Bridge2AI, a project funded by the National Institutes of Health Common Fund that seeks to create a diverse voice database linked to health biomarkers, which will be used to train AI algorithms and build predictive models for screening, diagnosis, and treatment. The multi-institutional project is collecting data through smartphone applications linked to electronic health records, focusing on five disease areas that are associated with voice changes:

- Vocal pathologies, such as laryngeal cancer and vocal fold paralysis

- Neurological and neurodegenerative disorders

- Mood and psychiatric disorders

- Respiratory disorders

- Pediatric disorders, such as autism spectrum disorder and speech delay

“We’re working on seeing whether voice can be used as a biomarker of specific diseases so that you could potentially screen and monitor patients in real world settings and remotely using their voice and algorithmic analysis,” Dr. Rameau says.

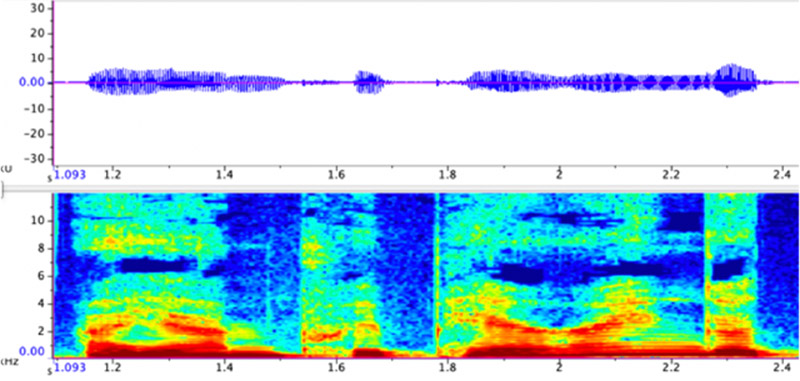

A waveform and spectrogram of Dr. Rameau’s own voice using Raven Pro software.

The project will also be critical to addressing one of the drawbacks of machine learning, namely that the tools are only as good as the data that goes into them. By collecting data from a diverse population, investigators aim to ensure that the resulting tools are applicable to a broad range of patients.

“If you don’t train the algorithm with data from diverse populations, then the algorithm may work well in a subset of the population but may work poorly in another subset,” Dr. Rameau says.

Dr. Rameau has also received a grant from the National Institute on Aging to examine voice as a biomarker of swallowing difficulty. Swallowing difficulty becomes more common as people age, but there is no reliable screening tool to help predict the onset of these problems. Dr. Rameau aims to create a voice-based tool that would better identify patients who could benefit from changes to food texture, oral care, or swallowing rehabilitation to help prevent swallowing difficulties.

“These are simple measures that could really benefit our older adult population,” Dr. Rameau says.

Increasing Access to Advanced Technologies

Dr. Rameau is also working on research that would apply AI to laryngoscopy, a diagnostic procedure used to examine the vocal folds and tissue in the larynx. AI technology could help identify pathologies during the procedure or serve as a “co-pilot” for physicians who perform these procedures less frequently, such as anesthesiologists and emergency physicians.

“My personal mission is to educate peers on the scope of what can be done with AI, and ultimately create tools that increase access to care,” Dr. Rameau says. “There are vast regions of the country that don’t have access to specialists, such as laryngologists. Patients everywhere deserve to have the best care and I’m hoping that with these technologies, we can allow people in remote areas to get specialized care.”

Dr. Rameau urges her colleagues not to be intimidated by AI and machine learning. For these technologies to reach their potential of helping patients, clinician input is critical. “We have to be engaged because engineers will not do it well. We’ve got to represent our patients and we’ve got to be involved in this process,” she says.